Robots.txt Generator

About Robots.txt Generator

A robots.txt file is a plain text file that tells web robots (commonly referred to as "bots" or "spiders") which parts of your website they may crawl. The file is placed in the root directory of your website and tells bots whether they are allowed to access specific pages or directories on your site.

A robots.txt generator is a tool that helps you create a robots.txt file for your website. It allows you to specify which pages or directories you want to block from being crawled by bots. This can be useful if you have pages on your website that you do not want search engines to crawl, or if you want to prevent certain types of bots from accessing your site.

To use a robots.txt generator, you typically enter the URL of your website and specify the pages or directories that you want to block. The generator then creates a robots.txt file with the appropriate instructions for bots. You can then upload the file to your website's root directory to start blocking bots from accessing the specified pages.

Some common uses for the robots.txt file include blocking sensitive or private pages, blocking pages with duplicate content, and blocking pages that are under construction or not yet ready to be indexed by search engines. By using a robots.txt generator, you can easily create a robots.txt file for your website and control how bots crawl and index your content.

What Is Robots.txt?

The robots.txt file is a text file that is used to instruct web robots (commonly referred to as "bots" or "spiders") how to crawl and index pages on your website. The file is placed in the root directory of your website and tells bots whether they are allowed to access specific pages or directories on your site.

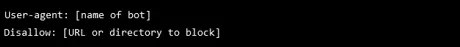

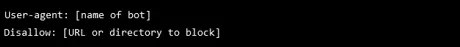

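The robots.txt file uses a specific syntax to specify rules for bots. In its basic format, each group of rules starts with a "User-agent" line that names the bot the rules apply to (or "*" for all bots), followed by one or more "Disallow" or "Allow" lines that each give a URL path.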

For example, if you wanted to block all bots from crawling the "private" directory on your website, you could use the two lines "User-agent: *" and "Disallow: /private/" in your robots.txt file. This would block all bots from crawling any pages in the "private" directory on your website. You can also use the "Allow" directive to specify pages or directories that you want to allow bots to crawl.
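One way to check such a rule before uploading it is to feed it to Python's standard `urllib.robotparser` module. The sketch below is only an illustration: the bot name "ExampleBot" and the example.com URLs are placeholders, and real crawlers may interpret rules slightly differently from this parser.

```python
from urllib.robotparser import RobotFileParser

# The rule from the text: every bot ("*") is kept out of /private/.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# "ExampleBot" and example.com are placeholders, not real values.
private_ok = parser.can_fetch("ExampleBot", "https://example.com/private/report.html")
home_ok = parser.can_fetch("ExampleBot", "https://example.com/index.html")

print(private_ok, home_ok)  # prints: False True
```

Paths outside "/private/" stay crawlable because no rule matches them.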

It's important to note that the robots.txt file is only a suggestion to bots and is not a hard and fast rule. Some bots may ignore the instructions in the file and crawl and index pages that are blocked. Additionally, the robots.txt file does not provide any security or protection for your website, as it can be easily accessed and read by anyone.

Robots.txt Example

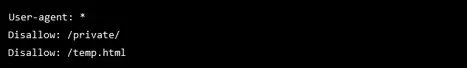

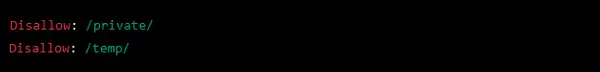

As an example, a robots.txt file that blocks all bots from crawling the "private" directory and the "temp" page on a website consists of three lines: "User-agent: *", "Disallow: /private/", and "Disallow: /temp.html". This robots.txt file specifies that all bots (indicated by the "*" wildcard character) should not crawl the "private" directory or the "temp.html" page on the website.
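The sketch below writes that file out in full and checks a few hypothetical paths against it with Python's standard `urllib.robotparser`. The paths, the bot name, and the domain are made up for illustration. Rules match by path prefix, which is why "/temporary.html" is not affected by the "/temp.html" rule.

```python
from urllib.robotparser import RobotFileParser

# Block one directory and one single page for every bot.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Disallow: /temp.html
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Hypothetical paths on a placeholder site.
paths = ["/private/notes.html", "/temp.html", "/temporary.html", "/index.html"]
allowed = {
    path: parser.can_fetch("ExampleBot", "https://example.com" + path)
    for path in paths
}

for path, ok in allowed.items():
    print(path, "allowed" if ok else "blocked")
```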

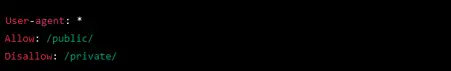

You can also use the "Allow" directive to specify pages or directories that you want to allow bots to crawl. For example, a robots.txt file containing the lines "User-agent: *", "Allow: /public/", and "Disallow: /private/" allows all bots to crawl the "public" directory but blocks them from crawling the "private" directory.
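A minimal sketch of the Allow and Disallow combination, again checked with Python's standard `urllib.robotparser` and placeholder names. Note that this parser applies the first rule that matches, while some search engines pick the most specific matching rule; for this simple file both approaches give the same answer.

```python
from urllib.robotparser import RobotFileParser

# Allow one directory explicitly and block another, for every bot.
ROBOTS_TXT = """\
User-agent: *
Allow: /public/
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# "ExampleBot" and example.com are placeholders.
public_ok = parser.can_fetch("ExampleBot", "https://example.com/public/index.html")
private_ok = parser.can_fetch("ExampleBot", "https://example.com/private/index.html")

print(public_ok, private_ok)  # prints: True False
```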

Importance of the Robots.txt File

The robots.txt file can be an important tool for controlling how bots crawl and index your website's content. Here are a few reasons why the robots.txt file is important:

- It allows you to specify which pages or directories you want to block from being crawled and indexed by bots. This can be useful if you have pages on your website that you do not want to be indexed by search engines, or if you want to prevent certain types of bots from accessing your site.

- It can be used to keep compliant search engine bots away from sensitive or private pages. If you have pages on your website that you would rather not have crawled, you can list them in the robots.txt file. Keep in mind that the file itself is publicly readable and that bots are free to ignore it, so pages containing confidential information should also be protected by other means, such as password protection.

- It can be used to block pages with duplicate content, which can help improve the overall quality of your website's content in the eyes of search engines. Duplicate content can be harmful to your website's SEO, as it can dilute the value of your website's content and make it less likely to rank well in search results. By using the robots.txt file to block duplicate pages, you can help improve the quality and relevance of your website's content.

- It can be used to block pages that are under construction or not yet ready to be indexed by search engines. This can be useful if you are in the process of updating or redesigning your website and do not want search engines to index unfinished pages.

Overall, the robots.txt file is an important tool for controlling how bots crawl and index your website's content. It is a simple and effective way to ensure that your website's content is presented in the best possible way to search engines and users.

Robots.txt Generator Step-by-Step Guide

Here is a step-by-step guide for using a robots.txt generator to create a robots.txt file for your website:

- Go to www.onlineseotool.net/tool/robots-txt-generator

- Enter the URL of your website into the robots text generator tool.

- Specify the pages or directories that you want to block from being crawled and indexed by bots. You can use the "Disallow" directive to block specific pages or directories.

- If you want to allow certain pages or directories to be crawled and indexed, you can use the "Allow" directive to specify which pages or directories you want to allow.

- Once you have specified the pages or directories that you want to block or allow, the generator tool will create a robots.txt file with the appropriate instructions for bots.

- Download the generated robots.txt file to your computer.

- Upload the robots.txt file to the root directory of your website. This is typically the same directory where your homepage is located.

- Test the robots.txt file to make sure that it is working correctly. You can do this by using a tool like Google Search Console or by manually checking to see if the pages or directories that you specified in the file are being blocked from being crawled and indexed.

- If you need to make any changes to the robots.txt file, you can simply edit the file and re-upload it to your website's root directory.

By following these steps, you can use a robots.txt generator to create a robots.txt file for your website and control how bots crawl and index your content.
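To make the steps above concrete, here is a rough sketch of what a generator does internally. It is not the code behind the tool linked above; `build_robots_txt` is a hypothetical helper, and the paths are placeholders. It builds the file text from lists of paths and then checks the result with Python's standard `urllib.robotparser`.

```python
from urllib.robotparser import RobotFileParser


def build_robots_txt(disallow, allow=(), user_agent="*"):
    """Return robots.txt text for a single user-agent group (hypothetical helper)."""
    lines = [f"User-agent: {user_agent}"]
    lines += [f"Allow: {path}" for path in allow]
    lines += [f"Disallow: {path}" for path in disallow]
    return "\n".join(lines) + "\n"


# Placeholder paths standing in for the choices made in the generator form.
robots_txt = build_robots_txt(disallow=["/private/", "/temp.html"], allow=["/public/"])
print(robots_txt)

# Quick local test of the generated rules before uploading the file.
parser = RobotFileParser()
parser.parse(robots_txt.splitlines())
blocked_ok = parser.can_fetch("ExampleBot", "https://example.com/private/a.html")
public_ok = parser.can_fetch("ExampleBot", "https://example.com/public/a.html")
```

The generated text would then be saved as robots.txt and uploaded to the root directory, as described in the guide.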

Robots.txt Disallow

A "robots.txt" file is a simple text file placed on a website to instruct web crawlers and other automated agents about which pages or sections of the site should not be accessed or indexed. The "Disallow" directive is used in the file to specify which pages or sections should not be crawled.

For example, if a website owner wants to prevent a specific page from being indexed by search engines, they would include a "Disallow" directive for that page in the robots.txt file.

Noindex in Robots.txt

A "robots.txt" file is a text file that website owners can use to tell search engines which pages on their site may be crawled. It is not a reliable place for a "noindex" instruction: "noindex" was never part of the robots.txt standard, and Google stopped honoring it in robots.txt files in September 2019. To tell search engines not to include a page in their search results, use a "noindex" robots meta tag in the page's HTML or an "X-Robots-Tag: noindex" HTTP response header instead. This can be useful if a website owner wants to keep certain pages out of search results for SEO purposes.

Disallow All in Robots.txt

The "Disallow: /" directive in a robots.txt file, placed under "User-agent: *", is used to block all search engines from crawling any pages on the website. This can be useful if a website owner wants to temporarily or permanently prevent search engines from accessing their site, for example if the site is under construction or if they don't want their site to be crawled for any reason.

It's important to keep in mind that while the robots.txt file is a suggestion to search engines, it is not a guarantee that they will obey the instructions. Some search engines may ignore the robots.txt file altogether, and some advanced web crawlers may be able to bypass it.

It's also important to note that a robots.txt file that disallows all search engines will not necessarily keep your website out of search results. If other sites link to your pages, a search engine can still list those URLs without crawling them, usually without a description.
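A short sketch of the disallow-all file, checked with Python's standard `urllib.robotparser`. The bot name and URLs are placeholders. The check only shows what a compliant crawler would do; it says nothing about bots that ignore the file.

```python
from urllib.robotparser import RobotFileParser

# "Disallow: /" matches every path, so the whole site is off limits.
ROBOTS_TXT = """\
User-agent: *
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Placeholder URLs on a placeholder site.
urls = [
    "https://example.com/",
    "https://example.com/about.html",
    "https://example.com/blog/post-1",
]
results = [parser.can_fetch("ExampleBot", url) for url in urls]

print(results)  # prints: [False, False, False]
```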

Is Robots.txt Good for SEO?

The robots.txt file can be useful for SEO in certain situations. For example, if you have pages on your website that you do not want to be indexed by search engines (such as duplicate pages or low-quality pages), you can use the robots.txt file to block these pages from being crawled and indexed. This can help improve the overall quality and relevance of your website's content in the eyes of search engines.

However, it's important to note that the robots.txt file is only a suggestion to bots and is not a hard and fast rule. Some bots may ignore the instructions in the file and crawl and index pages that are blocked. Additionally, the robots.txt file does not provide any security or protection for your website, as it can be easily accessed and read by anyone.

If you want to ensure that a page is not indexed by search engines, it is generally best to use the "noindex" meta tag rather than relying on the robots.txt file. The "noindex" tag tells search engines not to index the page, and is a more reliable way to prevent a page from being indexed. Do not combine it with a "Disallow" rule for the same page, however: a bot that is blocked from crawling the page never sees the "noindex" tag, so the URL can still end up in the index if other pages link to it.

Advantages of the Robots.txt File

Here are some advantages of using the robots.txt file:

- It allows you to specify which pages or directories you want to block from being crawled and indexed by bots.

- It can be used to block sensitive or private pages from being indexed by search engines.

- It can be used to block pages with duplicate content, which can help improve the overall quality of your website's content in the eyes of search engines.

- It can be used to block pages that are under construction or not yet ready to be indexed by search engines.

- It is easy to use and requires no special technical skills.

- It is a free and simple way to control how bots crawl and index your website's content.

Generate Robots.txt Using Notepad

- Determine which pages or resources on your website you do not want to be crawled by web robots. This could include pages that are under development, pages that contain sensitive information, or resources (such as images or files) that are not relevant to search engines.

- Open a plain text editor, such as Notepad or TextEdit.

- Type "User-agent: *" at the top of the file. This line specifies that the rules that follow apply to all web robots.

- For each page or resource that you want to block from being crawled, add a line that begins with "Disallow:" followed by the path of the page or resource, relative to the root of your site. For example, the lines "Disallow: /private/" and "Disallow: /temp/" will prevent web robots from crawling any pages or resources under the /private/ or /temp/ directories on your website.

- Save the file as robots.txt and upload it to the root directory of your website.

- Test your robots.txt file to make sure it is working as intended. You can use a tool such as Google's Search Console to see which pages on your website are being blocked from being crawled.

That is it! You have now created a robots.txt file that will instruct web robots how to crawl your website.
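If you prefer a script to a text editor, the same file can be produced with a few lines of Python. This is only a sketch under stated assumptions: the temporary folder stands in for your website's root directory, and the two paths are the examples from the steps above.

```python
import tempfile
from pathlib import Path

# Paths to block, taken from the example in the steps above.
blocked_paths = ["/private/", "/temp/"]

# First line applies the rules to all web robots, then one Disallow per path.
lines = ["User-agent: *"] + [f"Disallow: {path}" for path in blocked_paths]

# A temporary folder stands in for the website's root directory here.
site_root = Path(tempfile.mkdtemp())
robots_path = site_root / "robots.txt"
robots_path.write_text("\n".join(lines) + "\n", encoding="utf-8")

contents = robots_path.read_text(encoding="utf-8")
print(contents)
```

The file must be named exactly robots.txt and saved as plain text, which is why a plain text editor is recommended over a word processor.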

How to Check Robots.txt?

There are several ways you can check the robots.txt file for your website:

- Use a tool like Google Search Console: Google Search Console is a free service provided by Google that allows you to check the robots.txt file for your website and see if there are any issues with it. To check the robots.txt file using Google Search Console, you will need to verify your website with Google first. Once you have done this, you can go to the page indexing report (called "Coverage" in older versions of Search Console) and look for any errors or warnings related to the robots.txt file.

- View the file in a web browser: You can view the robots.txt file for any website by adding "/robots.txt" to the end of the website's URL. For example, to view the robots.txt file for "example.com", you would enter "example.com/robots.txt" into your web browser's address bar. This will show you the contents of the file, which will include any rules or directives for bots.

- Use a command-line tool like curl: If you have access to a command-line interface, you can use the curl command to retrieve the contents of the robots.txt file for a website. For example, the command "curl https://example.com/robots.txt" will retrieve the robots.txt file for "example.com".

- Use an online SEO tool: Tools such as Onlineseotool allow you to check the robots.txt file for a website. Simply enter the URL of the website and the tool will retrieve and display the contents of the file.

By using one of these methods, you can check the robots.txt file for your website and see what instructions it contains for web robots. This can be useful if you want to ensure that the file is working correctly or if you need to make any changes to the rules in the file.
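The browser and command-line methods above can also be sketched in Python using only the standard library. The helper names `robots_url` and `fetch_robots` are hypothetical, and example.com is a placeholder. The fetch function needs network access, so it is defined here but not called.

```python
from urllib.parse import urlsplit, urlunsplit
from urllib.request import urlopen


def robots_url(page_url):
    """Build the robots.txt address for the site that page_url belongs to."""
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


def fetch_robots(page_url, timeout=10):
    """Download and return the robots.txt text (requires network access)."""
    with urlopen(robots_url(page_url), timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


# Any page on the site leads to the same robots.txt location.
location = robots_url("https://example.com/blog/post?id=1")
print(location)  # prints: https://example.com/robots.txt

# Example call, left commented out because it goes over the network:
# print(fetch_robots("https://example.com/"))
```

The URL passed in must include the scheme ("https://"), since the helper reuses it when building the robots.txt address.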